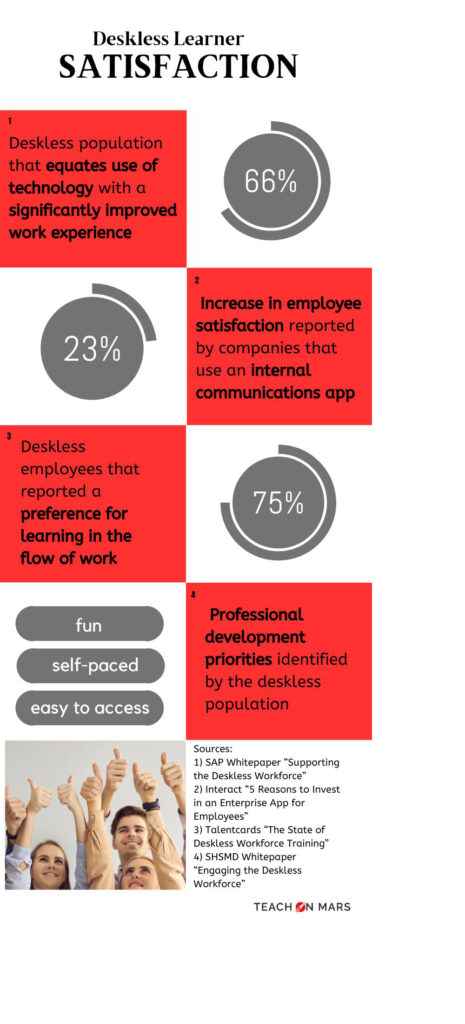

Our immediate reaction upon completing a training course can be measured in terms of satisfaction. Positive training experiences appear to boost employee motivation and commitment, while negative ones can erode them. Research has identified several factors that contribute to learner satisfaction, and by cultivating a positive user experience, you can nurture active engagement.

In a study conducted in Italy involving 3,000 employees with access to 300 different training courses, Giangreco¹ noted, “The satisfaction of the trainees influences their propensity to study, which can modify behavior to the point of improving individual and organizational results.” He identified significant positive correlations between learner satisfaction and the perceived efficiency of the training, its usefulness, and trainer performance. Of the three, he found that perceived usefulness has the greatest impact on learner satisfaction.

Wang²’s studies in Taiwan complement this notion. He views satisfaction as a response rather than a process and compares learners to customers: satisfaction results from the perceived quality of a service. Wang defines e-learner satisfaction as being derived from five facets: user interface, content, customization, learning community, and learning performance.

Let’s take a closer look at each of these facets.

Subjective satisfaction is factored into system usability assessments, such as Nielsen’s, which aim to improve the user interface of systems like websites and applications. To be considered user-friendly, a platform needs to be easy to use and quick to learn. This is especially important when usage is discretionary, as in asynchronous or mobile learning.

But how can you measure subjective satisfaction?

Nielsen suggests concluding with a short questionnaire. While a single learner’s reply is subjective, compiling answers from multiple users provides a more objective measure of the user experience. An end-of-training survey can provide valuable insight into a course’s strengths and weaknesses, which can then be fed back into course design to improve content, organization, and presentation. Below are a few examples of survey questions that solicit qualitative and quantitative feedback.

| Type of information | Survey questions to solicit learner feedback |
|---|---|
| Qualitative | Which aspects did you find most enjoyable? |
| Qualitative | Did you encounter any challenges/frustrations while completing this training? |
| Qualitative | What suggestions do you have for improving this learning experience? |
| Qualitative | Did you achieve your learning goal/objective? Explain why or why not. |
| Qualitative | Which content would you like to see added in the future? |
| Qualitative | Do you feel that the app/e-learning platform supports your preferred learning style? Please explain. |
| Quantitative | How would you rate your overall satisfaction with this training course? (Scale: 1 to 10) |
| Quantitative | How would you rate the clarity/effectiveness/content of this training course? (Scale: poor to excellent) |
| Quantitative | How likely are you to recommend this training to a friend or colleague? (Scale: very unlikely to very likely) |

We know that for training to be effective, participants must perceive its content as valuable. This requires them to see the benefits (developing skills and competence) as outweighing the costs (time and effort).

A retail sales training catalog might include anything from brand culture to product collections and client experience. When employers offer a rich catalog of relevant training courses, learners can access content that addresses their needs. Customized content and instant access to critical information empower autonomous learning.

Artificial intelligence is a powerful ally in curating content and creating responsive learning experiences. Activities such as profiling can also help learners connect with course content, tailoring the learning experience to their prior knowledge or interests.

Interactive elements require learners to take on a more active role. Parr studied gamification in training and found that it is “highly effective for teaching, retention and engagement.” Multiple-choice questions, naming activities, and sorting activities all help learners assess their level of understanding. Other practices that fall under the umbrella of gamification include awarding points or badges to document achievement.

Self-determination theory, developed by psychologists Deci and Ryan in the 1980s, identifies a trifecta of universal psychological needs that drive human motivation: autonomy, competence, and relatedness.

Well-designed e-learning supports all three, beginning with quality training content and followed by timely feedback. Feedback can take the form of likes and comments from peers within the learning community, or of responses to learners applying their new understanding in gamified activities. Developing a sense of belonging and building competence through learning performance both contribute to satisfaction.

Among scholars, Kirkpatrick’s four-level hierarchical model of training outcomes is viewed as the most reliable way to assess the effectiveness of training.

- Level 1 addresses our immediate reaction to the training.

- Level 2 focuses on learning that has occurred.

- Level 3 involves applying what was learned, resulting in a change in behavior.

- Level 4 examines improvements in results or performance.

Although time and budget constraints often limit organizations’ ability to evaluate beyond Level 1, focusing on learner perception can still have a substantial impact.

For further information on how to incorporate surveys, profiling, or gamified activities into training, consult our related article.

1. Antonio Giangreco, an expert in industrial relations, is currently a professor at the IESEG School of Management in Lille, France. In his article Trainees’ reactions to training: an analysis of the factors affecting overall satisfaction with training (2009), co-written with Antonio Sebastiano and Riccardo Peccei, he examines trainees’ immediate reactions to training and analyzes the factors that contribute to overall learner satisfaction.

2. Yi-Shun Wang is a professor of information management at the National Changhua University of Education in Taiwan. In his publication Assessment of learner satisfaction with asynchronous electronic learning systems (2003), he proposes a comprehensive model of his own construction for measuring learner satisfaction in asynchronous e-learning systems.

With a passion for crafting engaging learning experiences, Melissa joins the Academy after an extensive career in international education. In addition to designing content for the Teach on Mars application and facilitating onboarding sessions, Melissa is researching, as part of her master’s studies in pedagogical engineering, the relationship between the presentation of information, satisfaction, and retention in microlearning.